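```python
import math


def sigmoid(x):
    """Sigmoid function: maps any real x into the open interval (0, 1)."""
    # Branch on the sign of x so math.exp never receives a large positive
    # argument, which would raise OverflowError.
    if x >= 0:
        return 1 / (1 + math.exp(-x))
    z = math.exp(x)
    return z / (1 + z)


def relu(x):
    """ReLU function: returns x for positive inputs and 0 otherwise."""
    return max(0, x)


def tanh(x):
    """Tanh function: maps any real x into the open interval (-1, 1)."""
    # Mathematically (e^x - e^-x) / (e^x + e^-x); math.tanh computes the
    # same value without overflowing for large |x|.
    return math.tanh(x)


def softmax(x):
    """Softmax function: turns a list of scores into probabilities that sum to 1."""
    # Subtracting the maximum score leaves the result unchanged but keeps
    # math.exp from overflowing on large inputs.
    m = max(x)
    exps = [math.exp(xi - m) for xi in x]
    total = sum(exps)  # compute the normalizer once, not once per element
    return [e / total for e in exps]
```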

1. `sigmoid(x)`: The sigmoid function is a smooth, S-shaped function that squashes any real input into the range between 0 and 1. It is commonly used as the output activation in binary classification, where its output is read as the probability that the input belongs to the positive class.

2. `relu(x)`: The ReLU function simply sets all negative input values to 0 and leaves positive values unchanged. Its main advantage is its simplicity and computational efficiency, making it a popular choice for deep neural networks.

3. `tanh(x)`: The tanh function is a rescaled sigmoid (tanh(x) = 2·sigmoid(2x) − 1), so it has the same S shape but produces zero-centered outputs between -1 and 1 instead of between 0 and 1. It is commonly used inside recurrent neural networks (RNNs) and long short-term memory (LSTM) networks.

4. `softmax(x)`: The softmax function is used in multi-class classification problems. It converts a vector of real-valued scores into a probability distribution, where each element represents the probability that the input belongs to the corresponding class. The output probabilities always sum to 1.
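The properties listed above can be checked directly. The snippet below is a minimal, self-contained sketch: the sample inputs and the class scores `[2.0, 1.0, 0.1]` are arbitrary illustrative values, and `sigmoid` and `softmax` are re-declared here in numerically stable form so the block runs on its own. It also confirms numerically the identity tanh(x) = 2·sigmoid(2x) − 1 that relates tanh to the sigmoid.

```python
import math


def sigmoid(x):
    # Numerically stable logistic sigmoid.
    if x >= 0:
        return 1 / (1 + math.exp(-x))
    z = math.exp(x)
    return z / (1 + z)


def relu(x):
    return max(0.0, x)


def softmax(scores):
    # Shift by the max score for numerical stability, then normalize.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


inputs = [-3.0, -0.5, 0.0, 0.5, 3.0]

# Sigmoid outputs lie strictly between 0 and 1; sigmoid(0) is exactly 0.5.
sig = [sigmoid(x) for x in inputs]

# ReLU zeroes out negative inputs and passes positive ones through unchanged.
rel = [relu(x) for x in inputs]

# Tanh outputs lie strictly between -1 and 1 and are centered on 0.
tnh = [math.tanh(x) for x in inputs]

# Largest deviation from the identity tanh(x) = 2 * sigmoid(2x) - 1.
gap = max(abs(math.tanh(x) - (2 * sigmoid(2 * x) - 1)) for x in inputs)

# Softmax over three class scores yields a probability distribution.
probs = softmax([2.0, 1.0, 0.1])

print("sigmoid:", [round(v, 3) for v in sig])
print("relu:   ", rel)
print("tanh:   ", [round(v, 3) for v in tnh])
print("max |tanh(x) - (2*sigmoid(2x) - 1)|:", gap)
print("softmax:", [round(p, 3) for p in probs], "sum =", sum(probs))
```

The softmax output preserves the ordering of the scores: the largest score receives the largest probability.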

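Activation functions introduce non-linearity into a neural network. Without them, any stack of linear layers collapses into a single linear transformation, so the network could only model linear relationships no matter how deep it is. The right choice depends on the problem and the architecture: for example, sigmoid or softmax at the output layer for classification, and ReLU in the hidden layers of deep networks.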
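The claim that a network without activation functions stays linear can be verified with a tiny example. The minimal sketch below, in plain Python, treats two 2×2 weight matrices `W1` and `W2` (hypothetical toy values chosen only for illustration, biases omitted) as a two-layer network. Without an activation, the stacked layers give the same result as the single matrix product `W2 @ W1`; inserting ReLU between them breaks additivity, so f(a + b) ≠ f(a) + f(b).

```python
# Hypothetical toy weights for a two-layer network (biases omitted).
W1 = [[1.0, -2.0], [0.5, 1.0]]
W2 = [[2.0, 1.0], [-1.0, 3.0]]


def matvec(W, v):
    # Matrix-vector product W @ v.
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def matmul(A, B):
    # Matrix product: (A @ B)[i][j] = sum_k A[i][k] * B[k][j].
    return [
        [sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
        for i in range(len(A))
    ]


def relu_vec(v):
    return [max(0.0, x) for x in v]


x = [1.0, 1.0]

# Without an activation, two linear layers equal one linear layer W2 @ W1.
stacked = matvec(W2, matvec(W1, x))
collapsed = matvec(matmul(W2, W1), x)


def net(v):
    # Same two layers with ReLU applied between them.
    return matvec(W2, relu_vec(matvec(W1, v)))


# A linear map satisfies f(a + b) == f(a) + f(b); the ReLU network does not.
a = [1.0, 0.0]
b = [0.0, 1.0]
lhs = net([a[0] + b[0], a[1] + b[1]])
rhs = [p + q for p, q in zip(net(a), net(b))]

print("stacked:  ", stacked)
print("collapsed:", collapsed)
print("net(a + b):     ", lhs)
print("net(a) + net(b):", rhs)
```

With these weights the two linear paths agree at `[-0.5, 5.5]`, while the ReLU network gives `[1.5, 4.5]` for `net(a + b)` but `[3.5, 3.5]` for `net(a) + net(b)`.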

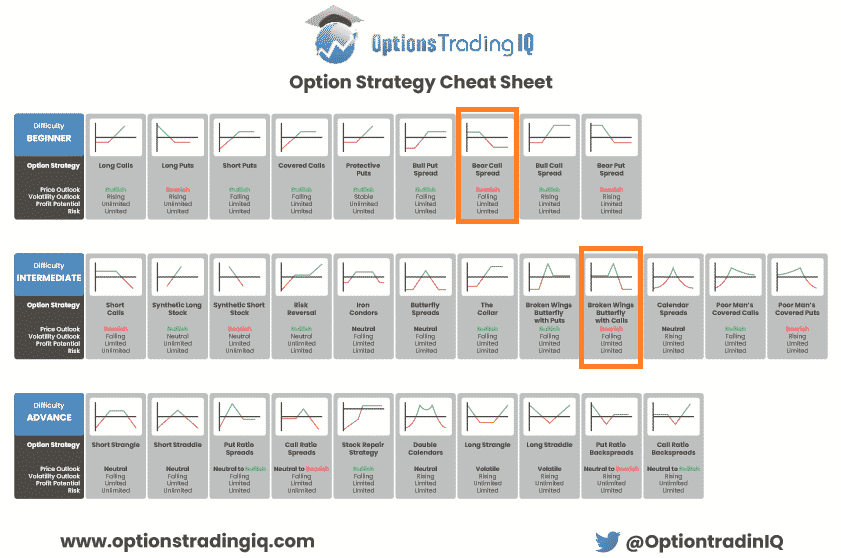
